AI and Legal Advice: When “Good Enough” Isn’t Good Enough, It’s a Disaster!

By HK Lawyer AJ Halkes Barrister-at-Law

Not long ago, I saw disaster looming when I asked ChatGPT questions specific to restaurant and liquor licensing practice in Hong Kong; the answers were BAD!

The responses were wrong in multiple specific ways: they missed key issues, misinterpreted context and offered dangerously incorrect opinions. In one instance, the “advice” was the exact opposite of what any licensing expert would ever say. For the untrained user, the risks of relying on AI looked massive.

As an expert, I could see where the AI had got confused, but what worried me was that many potential clients wouldn’t catch the errors and would act on them, which could lead to losses of millions of dollars.

So I gave up on ChatGPT. It wasn’t only the technical errors that made it a bad tool; the hedging (with some very clear mainstream media bias) and its inability to grasp the core practical issues were also problematic.

Months later, I tried Grok. The difference was dramatic. Grok handled questions with clearer reasoning, less bias, and provided more useful insight. It seemed to draw less from mainstream media noise and more from facts. It wasn’t perfect, but Grok avoided the most dangerous mistakes ChatGPT made.

So with AI improving rapidly, am I now out of a job?

Like many things in life, it depends.

Is “good enough” really good enough for you?

Alongside AI, we have cheaper and faster “legal advisors” popping up, and what they offer may look “good enough” until something goes wrong. In my opinion, the AI-assisted race to the bottom for “legal advice” has hit a real danger zone.

When it goes wrong, when the venture collapses, when the deal fails, or when the regulator calls you in for that “interview” about what you’ve been involved in, that’s when my phone rings.

And then I get asked to do what I’ve done for years: put the toothpaste back in the tube.

Because AI has no idea how to meet that uniquely multidimensional human challenge.

AI lacks what experienced professionals and experts bring to the table after decades of hard work. AI lacks judgment, it lacks real-world context, and it has none of the instincts needed to anticipate the unintended, or perhaps the kind of inquisition that nobody expects (apologies to Monty Python).

And there’s the Dunning-Kruger effect: AI doesn’t know what it doesn’t know, and neither do the users who rely on it. Uninformed or inexpert prompts riddled with errors lead to “rubbish in, rubbish out”.

As I see it, the perception of “good enough” is becoming a false comfort blanket.

And once you’ve fallen into the “good enough” trap, you won’t even know it until it’s too late.

If you need specific input on a strategic Hong Kong challenge or related legal matters in the HKSAR, you can always DM me and check out my profile at https://www.ajhalkes.com

#AIandLaw #LegalTech #GrokAI #ChatGPT #DunningKrugerEffect #ProfessionalAdvice #HKLaw #StrategicAdvice #ArtificialIntelligence #HongKongBusiness #dunningkruger #regulatorylaw

The government has imposed standards on protective netting around occupied residential properties By HK Lawyer AJ Halkes Barrister-at-Law The government has imposed standards on protective netting around occupied residential properties,...Read More

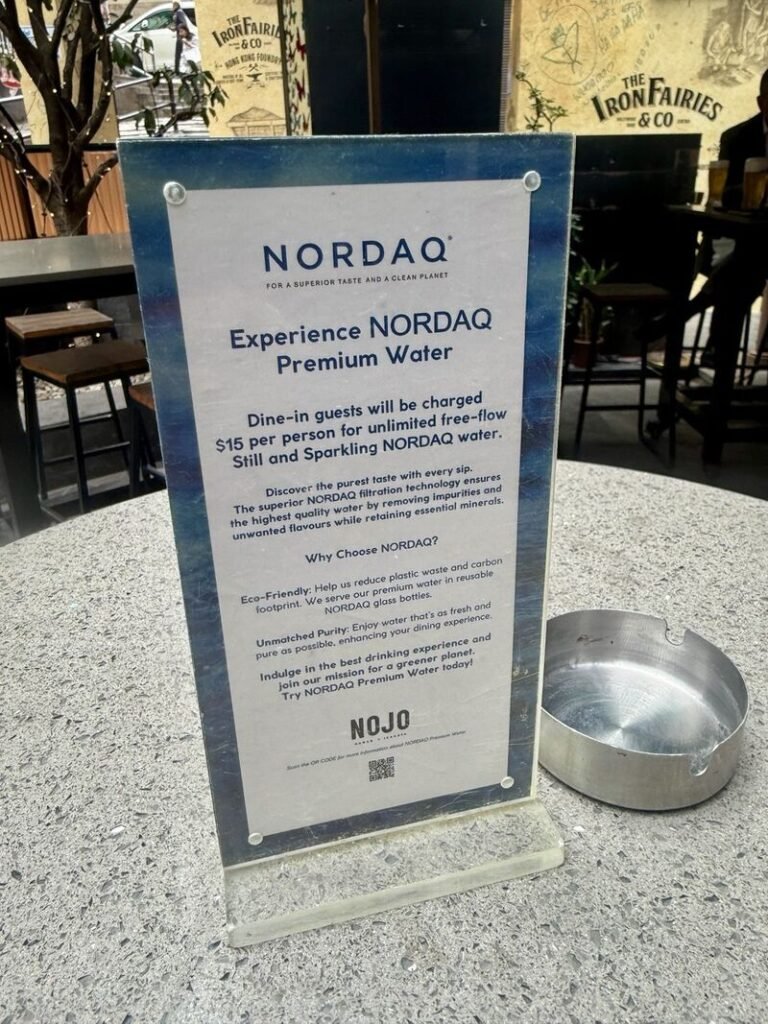

When water is basically free and all F&B outlets have a filtration system – why charge? By HK Lawyer AJ Halkes Barrister-at-Law When water is basically free, and all F&B...Read More

Suffering long term COVID effects is very real for some businesses By HK Lawyer AJ Halkes Barrister-at-Law Suffering long-term COVID effects is very real for some businesses. Even though the...Read More

Hire the right influencers, create the right buzz, now you can sell almost anything! By HK Lawyer AJ Halkes Barrister-at-Law I wrote about this place opening on a site that,...Read More